Optimizing Navigation Performance in Web Apps

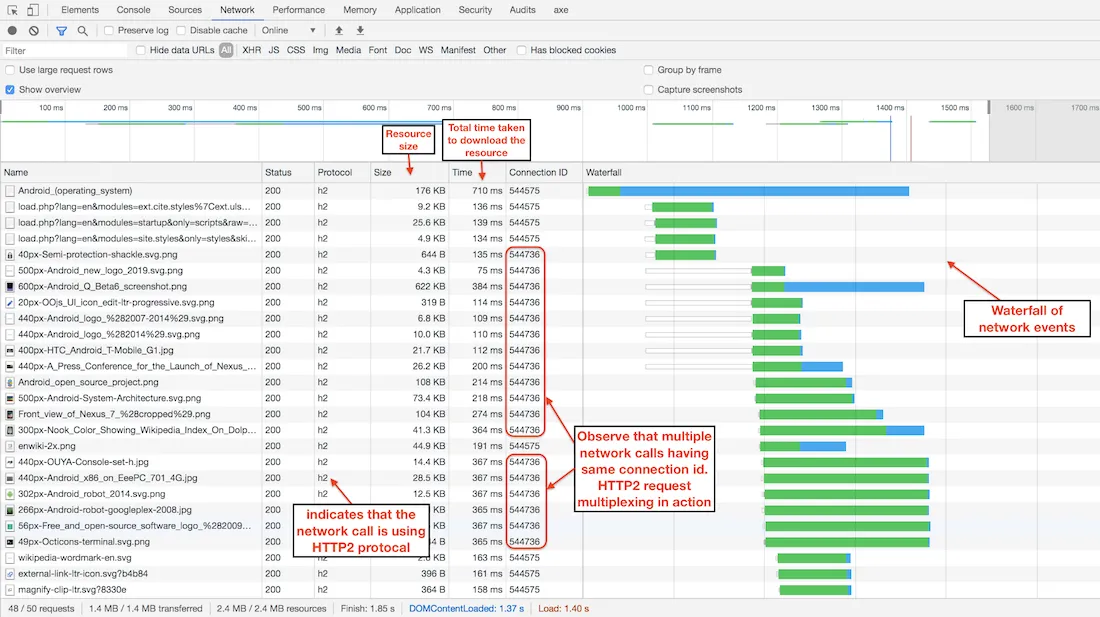

The Loading Waterfall Problem

- What is it? Sequential data fetching causes each request to wait for the previous one to finish.

- Impact: Total page load time grows with every request in the chain, so users wait longer to see content.

- Common Causes: Nested API calls, component-level fetches, poor data architecture.
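Here is a minimal sketch of the difference, using simulated requests. `fakeRequest` and the 50 ms delays are made up for illustration and are not real endpoints. When requests don't depend on each other, awaiting them one by one costs roughly the sum of their latencies. Starting them together with `Promise.all` costs roughly the slowest one.

```javascript
// Simulated API call: resolves with `value` after `ms` milliseconds.
// (Stands in for a real network request; the delays are arbitrary.)
function fakeRequest(value, ms) {
  return new Promise((resolve) => setTimeout(() => resolve(value), ms));
}

// Waterfall: each await blocks the next request from starting,
// so total time is roughly the SUM of the latencies.
async function loadSequential() {
  const user = await fakeRequest("user", 50);
  const posts = await fakeRequest("posts", 50);
  const stats = await fakeRequest("stats", 50);
  return [user, posts, stats];
}

// Parallel: all requests start immediately,
// so total time is roughly the MAX of the latencies.
async function loadParallel() {
  return Promise.all([
    fakeRequest("user", 50),
    fakeRequest("posts", 50),
    fakeRequest("stats", 50),
  ]);
}

// Measure how long an async function takes, in milliseconds.
async function timeIt(fn) {
  const start = Date.now();
  const result = await fn();
  return { result, ms: Date.now() - start };
}

timeIt(loadSequential).then(({ ms }) => console.log(`sequential: ~${ms} ms`));
timeIt(loadParallel).then(({ ms }) => console.log(`parallel:   ~${ms} ms`));
```

Parallelizing only works for independent requests. When one request needs another's result, the chain has to move somewhere with lower latency, such as the server.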

Client-Side Data Fetching Overhead

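```jsx
import axios from "axios";
import { useEffect, useState } from "react";

// Fetch-on-render: nothing is requested until this component mounts,
// which only happens after the JS bundle has downloaded and executed.
const ClientFetchDemo = () => {
  const [data, setData] = useState([]);
  const [loading, setLoading] = useState(true);
  const [error, setError] = useState(null);

  useEffect(() => {
    axios
      .get("https://reqres.in/api/unknown")
      .then((res) => setData(res.data.data)) // the list lives in the response's `data` field
      .catch(setError)
      .finally(() => setLoading(false));
  }, []);

  if (loading) return <p>Loading...</p>;
  if (error) return <p>Failed to load data.</p>;

  return (
    <ul>
      {data.map((item) => (
        <li key={item.id}>{item.name}</li>
      ))}
    </ul>
  );
};

export default ClientFetchDemo;
```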

- Slower initial loads: UI waits for JS bundle and then fetches data, delaying content display.

- Extra network round trips: Browser loads app, then makes API calls, increasing latency.

- Larger JS bundles: More logic shipped to client, slowing down page load and execution.

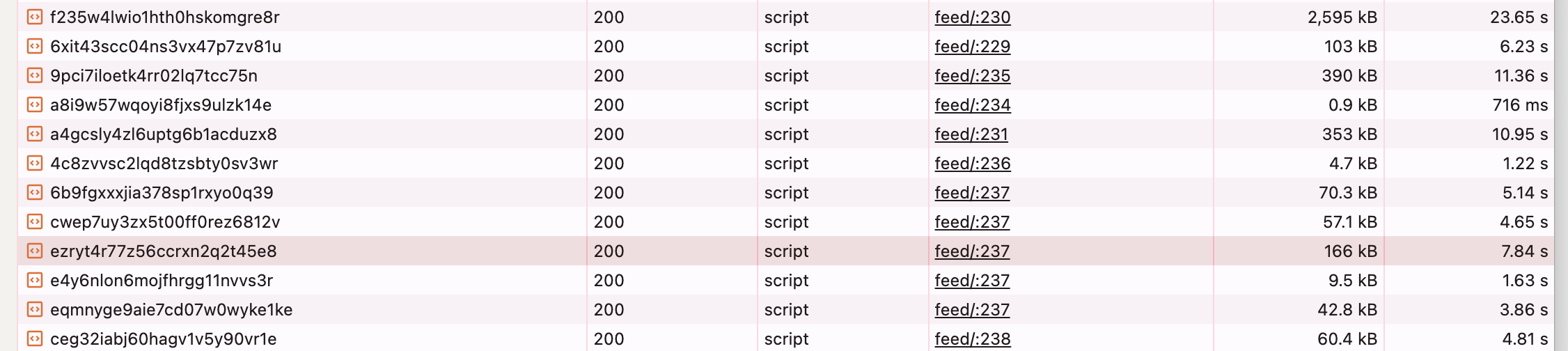

JavaScript Bundle Fetching Problem

- Large Bundles: Modern apps often ship hundreds of KBs of JS by default.

- Slow First Paint: In client-rendered apps, the browser must download, parse, and execute the JS before it can render the UI.

- Performance Penalty: Increased Time to Interactive (TTI) and poor Core Web Vitals.
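This is a rough back-of-the-envelope model of the download step alone. The example numbers (500 KB over a 1.6 Mbps link with a 150 ms round trip) are chosen for illustration. Real loads also pay for parsing and execution. The byte count should be the compressed size actually sent over the wire.

```javascript
// Rough lower bound on how long a bundle takes just to download:
//   time ≈ RTT + (bytes × 8) / bandwidth
// Assumptions: one request on an already-open connection, steady bandwidth,
// and `bytes` measured as transferred (after gzip/brotli).
// Parse and execute time come on top of this.
function downloadMs(bytes, bitsPerSecond, rttMs = 0) {
  const transferMs = ((bytes * 8) / bitsPerSecond) * 1000;
  return rttMs + transferMs;
}

// Example: a 500 KB bundle on a 1.6 Mbps link with a 150 ms round trip
const ms = downloadMs(500 * 1000, 1.6e6, 150);
console.log(`${Math.round(ms)} ms before the browser can start parsing`);
// prints "2650 ms before the browser can start parsing"
```

Halving the bundle size halves the transfer term. The round-trip cost is paid per sequential request, which is why the waterfall and the bundle size problems compound.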

Loading Data...

How do we make our apps faster?

One Roundtrip Per Navigation

- Instead of: URL → Code → Fetch → Render

- Do this: URL → [Code + Data] → Render

- The server knows which code and data to send based on the route

- This enables faster, flicker-free navigation

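```text
/* Instead of this waterfall */
navigate('/dashboard')
  → load Dashboard.js
  → fetch('/api/dashboard-data')
  → render

/* Do this: code and data in one roundtrip */
navigate('/dashboard')
  → [Dashboard.js + dashboard data]
  → render
```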
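This sketch shows what "one roundtrip per navigation" can look like inside a router. It assumes a hypothetical route table where each entry knows how to load its code and its data. `loadCode` and `loadData` are stand-ins for `import()` and `fetch()`. Because the router knows both from the URL alone, it can start them at the same moment rather than waiting for the component to mount.

```javascript
// Stand-in for network latency: resolves with `value` after `ms` milliseconds.
const delay = (ms, value) => new Promise((r) => setTimeout(() => r(value), ms));

// Hypothetical route table: the URL alone tells us what code and data a page needs.
const routes = {
  "/dashboard": {
    loadCode: () => delay(40, "Dashboard component"), // stands in for import("./Dashboard.js")
    loadData: () => delay(40, { widgets: 3 }),        // stands in for fetch("/api/dashboard-data")
  },
};

// Start code and data together; render once both have arrived.
async function navigate(path) {
  const route = routes[path];
  if (!route) throw new Error(`No route for ${path}`);
  const [Component, data] = await Promise.all([route.loadCode(), route.loadData()]);
  return { Component, data };
}

navigate("/dashboard").then(({ Component, data }) =>
  console.log(`render ${Component} with`, data)
);
```

Total time is about 40 ms here instead of about 80 ms, because neither load waits for the other.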

Server Loaders: Break the Waterfall

- Loaders are tied to routes: they fetch route-specific data before render

- Executed on the server (SSR) or in the browser at navigation time (SPA)

- No useEffect. No extra fetch. No waterfall.

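```jsx
// React Router 6.4+
import { createBrowserRouter } from "react-router-dom";

const router = createBrowserRouter([
  {
    path: "/invoices/:id",
    // Runs as soon as navigation starts, before <Invoice /> renders
    loader: async ({ params }) => {
      return fetch(`/api/invoices/${params.id}`);
    },
    // Invoice is your route component; it reads the data with useLoaderData()
    element: <Invoice />,
  },
]);
```

```jsx
// Remix example
import { json } from "@remix-run/node";

export async function loader({ params }) {
  // getInvoiceById is your own data-access helper
  const invoice = await getInvoiceById(params.id);
  return json(invoice);
}
```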

Navigation triggers data + code load in one go
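To show what a loader-based router does under the hood, here is a framework-agnostic sketch. It is not React Router's or Remix's actual implementation. It matches the URL against a pattern like `/invoices/:id`, runs the route's loader with the extracted params, and renders only once the data is there. `getInvoiceById` is a hypothetical stand-in for a database or API call.

```javascript
// Minimal route matcher: turns "/invoices/:id" into a RegExp and records param names.
function compile(pattern) {
  const keys = [];
  const source = pattern.replace(/:([A-Za-z_]\w*)/g, (_, key) => {
    keys.push(key);
    return "([^/]+)"; // one path segment per param
  });
  return { regex: new RegExp(`^${source}$`), keys };
}

// Hypothetical data source standing in for a DB or API call.
async function getInvoiceById(id) {
  return { id, total: 42 };
}

const routes = [
  {
    path: "/invoices/:id",
    loader: async ({ params }) => getInvoiceById(params.id),
    render: (invoice) => `<h1>Invoice ${invoice.id} (total ${invoice.total})</h1>`,
  },
];

// The router runs the matched route's loader BEFORE rendering,
// so the component never has to fetch in an effect after mounting.
async function handleNavigation(url) {
  for (const route of routes) {
    const { regex, keys } = compile(route.path);
    const match = url.match(regex);
    if (!match) continue;
    const params = Object.fromEntries(keys.map((k, i) => [k, match[i + 1]]));
    const data = await route.loader({ params });
    return route.render(data);
  }
  return "<h1>Not found</h1>";
}

handleNavigation("/invoices/7").then(console.log);
```

A real router also handles nested routes, errors, redirects, and cancelling stale navigations. This sketch only shows the ordering: match, load, then render.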

React Server Components

- Each async Server Component acts as its own server-side data loader

- The server starts rendering from the top: no client-side fetch

- Data dependencies live alongside components (not centralized)

- The client gets an already-rendered tree, so there is no client-side fetch waterfall

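```jsx
// Server Components (e.g. the Next.js App Router)
import { Suspense } from "react";

// loadPost and loadComments are your own server-side data helpers
async function PostContent({ postId }) {
  const post = await loadPost(postId);
  return (
    <article>
      <h1>{post.title}</h1>
      <p>{post.content}</p>
      {/* Stream comments in when ready instead of blocking the whole page */}
      <Suspense fallback={<p>Loading comments...</p>}>
        <Comments postId={postId} />
      </Suspense>
    </article>
  );
}

async function Comments({ postId }) {
  const comments = await loadComments(postId);
  return (
    <ul>{comments.map(c => <li key={c.id}>{c.text}</li>)}</ul>
  );
}
```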

Result: Navigation triggers a single streamed tree with code + data
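Here is a toy model of the idea in plain JavaScript. Each "component" is an async function that loads its own data and returns an HTML string. This is only an analogy: React's real RSC protocol streams a serialized component tree, not strings. `loadPost`, `loadComments`, and the in-memory `db` are hypothetical. The sketch also shows one detail worth copying: starting the child's data load before awaiting the parent's avoids a waterfall on the server.

```javascript
// Hypothetical in-memory data standing in for a database.
const db = {
  posts: { 1: { title: "Hello", content: "First post" } },
  comments: { 1: [{ id: 1, text: "Nice!" }, { id: 2, text: "Thanks" }] },
};
const loadPost = async (id) => db.posts[id];
const loadComments = async (id) => db.comments[id] ?? [];

// Each "component" loads its own data and returns markup.
async function Comments({ postId }) {
  const comments = await loadComments(postId);
  return `<ul>${comments.map((c) => `<li>${c.text}</li>`).join("")}</ul>`;
}

async function PostContent({ postId }) {
  // Start the child's work first so its data load overlaps with loadPost
  // instead of waiting for it (avoiding a waterfall on the server).
  const commentsHtml = Comments({ postId });
  const post = await loadPost(postId);
  return `<article><h1>${post.title}</h1><p>${post.content}</p>${await commentsHtml}</article>`;
}

// The "server" resolves the whole tree and sends finished markup,
// so the client makes no follow-up data requests for this page.
PostContent({ postId: 1 }).then((html) => console.log(html));
```

Running it prints `<article><h1>Hello</h1><p>First post</p><ul><li>Nice!</li><li>Thanks</li></ul></article>`.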

Prefetching: Eliminate Perceived Latency

- Prefetch assets and data for likely next routes

- Happens on hover, viewport entry, or predictive heuristics

- Frameworks like Next.js, Remix, and React Router automate this

- Preloads code and data → instant-feeling navigation

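```jsx
// Next.js: <Link> prefetches when the link is visible or hovered (production builds only)
import Link from 'next/link';

<Link href="/dashboard">Go to Dashboard</Link>
```

```jsx
// Remix: prefetch="intent" prefetches on hover or focus
import { Link } from '@remix-run/react';

<Link to="/profile" prefetch="intent">Profile</Link>
```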

Result: Code + data already loaded by the time you click ⚡
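Frameworks handle this for you, but the core mechanism is small. This sketch assumes a hypothetical `loadRoute(url)` that fetches a route's code and data. It prefetches on intent, caches the in-flight promise per URL, and reuses that promise on click so the navigation doesn't start from zero.

```javascript
// Intent-based prefetching sketch. `loadRoute(url)` is a hypothetical function
// that fetches a route's code and data and returns a Promise.
function createPrefetcher(loadRoute) {
  const cache = new Map(); // url -> Promise of the route payload

  function load(url) {
    if (!cache.has(url)) {
      const promise = loadRoute(url).catch((err) => {
        cache.delete(url); // drop failures so a later attempt retries
        throw err;
      });
      cache.set(url, promise);
    }
    return cache.get(url);
  }

  return {
    // Call on hover/focus. Fire-and-forget: errors are swallowed here.
    prefetch(url) {
      load(url).catch(() => {});
    },
    // Call on click: reuses the in-flight or completed prefetch when there is one.
    navigate(url) {
      return load(url);
    },
  };
}
```

In a browser, `prefetch` would be called from a `pointerenter` or `focus` listener on a link, and `navigate` from its `click` handler. A production version would also cap the cache size and expire stale entries.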

Persistent Layouts: Don't Tear Down Everything

- Shared UI (e.g. navbars, sidebars) should stay mounted on route change instead of being torn down and re-created

- Reduces UI flicker and improves perceived speed

- Preserve state (scroll, form drafts, etc.) across pages

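```jsx
// React Router: a layout route stays mounted while its child routes swap
import { Outlet } from "react-router-dom";

// Navbar and Sidebar are your own components
function Layout() {
  return (
    <div>
      <Navbar />
      <Sidebar />
      <Outlet /> {/* the matched child route renders here */}
    </div>
  );
}
```

```tsx
// Next.js App Router
// app/layout.tsx (the root layout must render <html> and <body>)
import type { ReactNode } from "react";

export default function Layout({ children }: { children: ReactNode }) {
  return (
    <html lang="en">
      <body>
        <Navbar />
        <Sidebar />
        <main>{children}</main>
      </body>
    </html>
  );
}
```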

Result: Navigations feel like content swaps, not full reloads ✨
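Here is a toy model of why nested layouts survive navigation. Treat each URL segment as a layout level; only the levels after the first difference need to remount. Real routers match route patterns rather than raw strings, so treat this as an approximation of the idea, not of any framework's algorithm.

```javascript
// Toy model of nested layouts: each URL segment is one layout level.
// Segments shared by the old and new URL stay mounted (and keep their state);
// only the segments after the first difference unmount/mount.
function diffLayouts(fromPath, toPath) {
  const from = fromPath.split("/").filter(Boolean);
  const to = toPath.split("/").filter(Boolean);
  let i = 0;
  while (i < from.length && i < to.length && from[i] === to[i]) i++;
  return {
    kept: ["root", ...to.slice(0, i)], // the root layout (navbar, sidebar) always persists
    unmounted: from.slice(i),
    mounted: to.slice(i),
  };
}

console.log(diffLayouts("/dashboard/settings", "/dashboard/billing"));
```

Going from `/dashboard/settings` to `/dashboard/billing` keeps the root and `dashboard` layouts, so their scroll position and form state survive. Only the `settings` page is swapped for `billing`.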

Fix your bundle size :)

Use Smaller Libraries

- Swap heavy libraries with lighter alternatives

- Helps reduce bundle size with little refactoring when the lighter library has a similar API

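```js
// Instead of moment.js (~300KB)
import dayjs from 'dayjs';

// Instead of the full lodash package
import debounce from 'just-debounce-it';
// (or import only the one lodash module you need: import debounce from 'lodash/debounce')
```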

Result: Smaller bundle and faster load for little effort 🎯
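Sometimes the lightest dependency is none at all. Below is a minimal hand-written debounce as a sketch. This swaps in a helper of our own rather than either library above. It omits options that lodash's `debounce` offers, such as `leading`, `trailing`, and `maxWait`.

```javascript
// debounce(fn, wait): delays calling fn until `wait` ms have passed
// without another call; only the last call's arguments are used.
function debounce(fn, wait) {
  let timer;
  return function debounced(...args) {
    clearTimeout(timer); // cancel the previously scheduled call, if any
    timer = setTimeout(() => fn.apply(this, args), wait);
  };
}

// Usage: run a search only after the user stops typing for 300 ms
const search = debounce((q) => console.log(`searching for "${q}"`), 300);
search("r");
search("re");
search("rea"); // only this call runs, ~300 ms later
```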

Defer Non-Critical JavaScript

- Load features like analytics, chat, and widgets after paint

- Use `<script defer>` or `<script async>`, or load on user interaction

- Next.js: use `<Script strategy="lazyOnload" />`

```jsx
// Next.js: load a third-party script during browser idle time after the page loads
import Script from 'next/script';

<Script
  src="https://analytics.example.com/script.js"
  strategy="lazyOnload"
/>
```

Result: Critical path stays light, TTI improves ⚡
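Here is a sketch of "load on user interaction". It wraps a dynamic `import()` so the chunk is requested only on first use, and caches the promise so repeated clicks don't re-request it. `./chat-widget.js` and `openChat` are hypothetical names. Bundlers such as webpack and Vite split dynamically imported modules into separate chunks, which is what keeps them off the critical path.

```javascript
// Load a non-critical feature only when the user first needs it.
// `loader` would typically be () => import("./chat-widget.js") (hypothetical module).
function loadOnFirstUse(loader) {
  let pending = null; // cached promise so the module is requested at most once
  return () => {
    if (!pending) {
      pending = loader().catch((err) => {
        pending = null; // allow a retry if the download failed
        throw err;
      });
    }
    return pending;
  };
}

// Usage sketch (browser):
//   const loadChat = loadOnFirstUse(() => import("./chat-widget.js"));
//   chatButton.addEventListener("click", async () => {
//     const { openChat } = await loadChat(); // openChat is hypothetical
//     openChat();
//   });
```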

Set Performance Budgets

- What is it? A performance budget sets thresholds for metrics like JS size, TTI, LCP

- Helps catch regressions early in CI/CD pipelines

- Makes performance a shared goal across teams

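```js
// webpack.config.js: enforce a size budget at build time
module.exports = {
  performance: {
    hints: "error",            // fail the build ("warning" only warns)
    maxEntrypointSize: 250000, // bytes, example budget
    maxAssetSize: 250000,      // bytes, example budget
  },
};
// BundleAnalyzerPlugin (webpack-bundle-analyzer) shows what fills the budget,
// but it only reports sizes; it does not enforce them.
```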

Result: Prevent slow pages before they reach production 🚨
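Budgets don't require a specific tool. Below is a minimal sketch of a CI-style check. It assumes you already have the built asset sizes in bytes. The names and numbers are made up; in practice you might read them with `fs.statSync` on your build output.

```javascript
// Return a message for every asset larger than the budget (equal is allowed).
function checkBudget(assets, maxBytes) {
  return assets
    .filter((a) => a.bytes > maxBytes)
    .map((a) => `${a.name} is ${a.bytes} bytes, over the ${maxBytes}-byte budget`);
}

// Example sizes, made up for illustration
const assets = [
  { name: "main.js", bytes: 180000 },
  { name: "vendor.js", bytes: 310000 },
];

const violations = checkBudget(assets, 250000);
violations.forEach((v) => console.error(v));
// In CI, set a non-zero exit code so the job fails:
//   if (violations.length > 0) process.exitCode = 1;
```

Running this on every pull request turns a budget from a guideline into a gate: the regression is caught in the PR that introduced it.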

Build for Speed by Default 🚀

- Optimize for minimal roundtrips per navigation

- Use server-side data fetching where possible

- Implement prefetching and persistent UI patterns

- Keep JavaScript small, efficient, and deferred

- Make performance a culture, not just a one-time fix

Fast apps = happy users = better business 💸